Anthropogenic climate change is altering Earth’s natural processes at unprecedented rates. Increased fossil fuel emissions, coupled with global deforestation, have altered Earth’s energy budget, creating the potential for positive feedback loops to further warm our planet. While some of this warming manifests as glacier melt, more powerful storm systems, and rising global temperatures, an estimated 93% of the total energy gained from the greenhouse effect is stored in the ocean, with the remaining 7% warming the atmosphere and land and melting ice (von Schuckmann et al. 2016, as cited in Cazenave et al. 2018). This storage of heat in the ocean drives oceanic thermal expansion, which, in combination with glacier melt, is contributing to global sea level rise. Currently, an estimated 230 million people live on land less than 1 m above current high tide lines, and if emissions are not curbed, projected sea level rise ranges from 1.1 to 2.1 m by 2100 (Kulp & Strauss 2019, Sweet et al. 2022). The global impact of sea level rise has thus become a prominent area of scientific research, with leading methods using satellite altimetry to measure the height of the ocean surface globally over time.

Since the early 1990s, sea surface height has been recorded by a succession of satellites, amassing information on subseasonal to multi-decadal time scales (Cazenave et al. 2018). NASA’s Sea Level Change Portal reports these data sub-annually and currently records a sea level rise of 103.8 mm since 1993 (NASA). Seeking more information on current trends in satellite altimetry, I reached out to French geophysicist Dr. Anny Cazenave of the French space agency CNES and director of Laboratoire d’Etudes en Geophysique et Oceanographie Spatiale (LEGOS) in Toulouse, France. Dr. Cazenave is a pioneer in geodesy, has worked as one of the leading scientists on numerous altimetry missions, was a lead author on sea level rise for two Intergovernmental Panel on Climate Change (IPCC) reports, and won the prestigious Vetlesen Prize in 2020 (European Space Sciences Committee).
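To put the 103.8 mm figure in context, the short sketch below converts it into a simple average rate. It assumes the portal value was read in 2024 (the retrieval year given in the reference list), so the elapsed time is taken as roughly 31 years. This is a plain average, not the fitted trend that the portal itself reports, and the function name is only illustrative.

```python
# Rough average rate of sea level rise implied by the portal figure.
RISE_MM = 103.8      # total rise since 1993, from the article
START_YEAR = 1993    # start of the satellite record, from the article
READ_YEAR = 2024     # ASSUMPTION: year the portal value was read


def average_rate_mm_per_year(rise_mm, start_year, end_year):
    """Return the mean rate of rise over the interval, in mm per year."""
    return rise_mm / (end_year - start_year)


rate = average_rate_mm_per_year(RISE_MM, START_YEAR, READ_YEAR)

# Hypothetical constant-rate extrapolation to 2100, for comparison only.
extra_rise_m = rate * (2100 - READ_YEAR) / 1000.0

print(f"Average rate since {START_YEAR}: {rate:.1f} mm/yr")
print(f"Additional rise by 2100 at a constant rate: {extra_rise_m:.2f} m")
```

The average works out to a little over 3 mm per year. Holding that rate constant would add only about a quarter of a meter by 2100, far less than the 1.1 to 2.1 m projections quoted earlier. The comparison is loose because the baselines differ, but it suggests those projections rely on the rate of rise accelerating.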

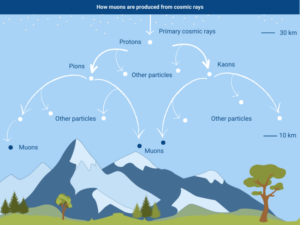

When asked about recent advancements in altimetry technology, Dr. Cazenave directed me towards the international Surface Water and Ocean Topography (SWOT) satellite mission, launched in 2022. SWOT can detect ocean features with ten times the resolution of current technology, enabling fine-scale analysis of oceans, lakes, rivers, and more (NASA SWOT). To measure sea level, SWOT uses a Ka-band Radar Interferometer (KaRIn), which is capable of measuring the elevation of almost all bodies of water on Earth. KaRIn measures microwave signals reflected off Earth’s surface using two antennas mounted 10 meters apart, which enables it to generate a detailed topographic image of the surface (NASA SWOT). With SWOT’s high-resolution capability for mapping sea level anomalies close to shore, more accurate estimates of how sea level rise will affect coastal communities should become available in the future.
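The two-antenna measurement described above can be illustrated with a simplified cross-track interferometry sketch. It is a toy model under stated assumptions, not KaRIn's actual processing chain: the Earth is treated as flat, the 10 m baseline is horizontal, one antenna transmits while both receive, and the phase difference is assumed to be already unwrapped. The carrier frequency (35.75 GHz) and orbit altitude (890 km) are illustrative values that do not come from this article, and all function names are hypothetical.

```python
import math

C = 299_792_458.0          # speed of light, m/s
FREQ_HZ = 35.75e9          # ASSUMPTION: Ka-band carrier frequency
WAVELENGTH = C / FREQ_HZ   # roughly 8.4 mm
BASELINE = 10.0            # antenna separation, m (from the article)
ALTITUDE = 890e3           # ASSUMPTION: orbit altitude above the reference surface, m


def simulate_phase(cross_track_m, surface_height_m):
    """Forward model: range from the boom centre and the unwrapped
    phase difference between the two antennas for one surface point."""
    dz = ALTITUDE - surface_height_m
    r_far = math.hypot(cross_track_m + BASELINE / 2, dz)   # antenna farther from the target
    r_near = math.hypot(cross_track_m - BASELINE / 2, dz)  # antenna nearer to the target
    r_mid = math.hypot(cross_track_m, dz)
    phase = 2 * math.pi * (r_far - r_near) / WAVELENGTH
    return r_mid, phase


def look_angle(phase):
    """Far-field approximation: path difference = BASELINE * sin(look angle)."""
    return math.asin(WAVELENGTH * phase / (2 * math.pi * BASELINE))


def height_from_phase(r_mid, phase):
    """Invert the geometry: surface height from range and phase difference."""
    return ALTITUDE - r_mid * math.cos(look_angle(phase))


def height_error_per_radian(r_mid, phase):
    """Height error (m) caused by one radian of phase error at this point."""
    return r_mid * math.tan(look_angle(phase)) * WAVELENGTH / (2 * math.pi * BASELINE)


# A point 30 km off the ground track with a 0.5 m sea surface height anomaly.
r_mid, phase = simulate_phase(30e3, 0.5)
print(f"Recovered height: {height_from_phase(r_mid, phase):.3f} m")
print(f"Sensitivity: {height_error_per_radian(r_mid, phase):.2f} m per radian of phase")
```

In this toy geometry a milliradian of phase error already corresponds to a few millimeters of height error, which gives a feel for why the phase has to be measured so precisely.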

Finally, in light of recent developments in AI and machine learning, Dr. Cazenave noted the power of these computational methods for analyzing large data sets. The high-precision data provided by SWOT require advanced methods of analysis to physically represent sea level changes, posing a challenge for researchers (Stanley 2023). A few recent papers have already highlighted the use of neural networks trained on current altimetry and sea surface temperature data (Xiao et al. 2023, Martin et al. 2023). These neural networks can then interpret the high-resolution data, enabling a greater understanding of ocean dynamics and sea surface anomalies. Dr. Cazenave explained that the key questions to answer regarding sea level rise are: (1) how will ice sheets contribute to future sea level rise, (2) how much will sea level rise in coastal regions, and (3) how will rising sea levels contribute to shoreline erosion and retreat. With novel computational analysis techniques and advanced sea surface monitoring, many of these questions are being answered with greater accuracy. As we navigate the effects of climate change, combining science and policy will allow us to design multifaceted solutions that enable a sustainable future for all.

References

- Anny Cazenave. European Space Sciences Committee. (n.d.). https://www.essc.esf.org/panels-members/anny-cazenave%E2%80%8B/

- Cazenave, A., Palanisamy, H., & Ablain, M. (2018). Contemporary sea level changes from satellite altimetry: What have we learned? What are the new challenges? Advances in Space Research, 62(7), 1639–1653. https://doi.org/10.1016/j.asr.2018.07.017

- Home. (n.d.). NASA Sea Level Change Portal. Retrieved April 24, 2024, from https://sealevel.nasa.gov/

- Joint NASA, CNES Water-Tracking Satellite Reveals First Stunning Views. (n.d.). NASA SWOT. Retrieved April 24, 2024, from https://swot.jpl.nasa.gov/news/99/joint-nasa-cnes-water-tracking-satellite-reveals-first-stunning-views

- Kulp, S. A., & Strauss, B. H. (2019). New elevation data triple estimates of global vulnerability to sea-level rise and coastal flooding. Nature Communications, 10(1), 4844. https://doi.org/10.1038/s41467-019-12808-z

- Martin, S. A., Manucharyan, G. E., & Klein, P. (2023). Synthesizing Sea Surface Temperature and Satellite Altimetry Observations Using Deep Learning Improves the Accuracy and Resolution of Gridded Sea Surface Height Anomalies. Journal of Advances in Modeling Earth Systems, 15(5), e2022MS003589. https://doi.org/10.1029/2022MS003589

- Stanley, S. (2023, October 17). Machine Learning Provides a Clearer Window into Ocean Motion. Eos. http://eos.org/research-spotlights/machine-learning-provides-a-clearer-window-into-ocean-motion

- Xiao, Q., Balwada, D., Jones, C. S., Herrero-González, M., Smith, K. S., & Abernathey, R. (2023). Reconstruction of Surface Kinematics From Sea Surface Height Using Neural Networks. Journal of Advances in Modeling Earth Systems, 15(10), e2023MS003709. https://doi.org/10.1029/2023MS003709

- von Schuckmann, K., Palmer, M., Trenberth, K., et al. (2016). An imperative to monitor Earth’s energy imbalance. Nature Climate Change, 6, 138–144. https://doi.org/10.1038/nclimate2876