What makes life life? Is there underlying code that, when written or altered, can be used to replicate or even create life? On February 19, 2025, scientists from Arc Institute, NVIDIA, Stanford, Berkeley, and UC San Francisco released Evo 2, a generative machine learning model that may help answer these questions. Unlike its precursor Evo 1, which was released a year earlier, Evo 2 is trained on genomic data of eukaryotes as well as prokaryotes. In total, it is trained on 9.3 trillion nucleotides from over 130,000 genomes, making it the largest AI model in biology to date. You can think of it as ChatGPT for creating genetic code, only it “thinks” in the language of DNA rather than human language, and it is being used to tackle pressing health and disease challenges (rather than calculus homework).

Computers, defined broadly, are devices that store, process, and display information. Digital computers, such as your laptop or phone, function based on binary code—the most basic form of computer data composed of 0s and 1s, representing a current that is on or off. Evo 2 centers around the idea that DNA functions as nature’s “code,” which, through protein expression and organismal development, creates “computers” of life. Rather than binary, organisms function according to genetic code, made up of A, T, C, G, and U–the five major nucleotide bases that constitute DNA and RNA.
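
To make the analogy concrete, here is a small illustrative sketch (ours, not part of Evo 2). Because DNA uses only four bases (RNA swaps T for U), each base can be written with two binary digits. The particular bit assignment and the function names are arbitrary choices made for this example.

```python
# Illustrative only: the 2-bit code assigned to each base is an arbitrary choice.
BASE_TO_BITS = {"A": "00", "C": "01", "G": "10", "T": "11"}
BITS_TO_BASE = {bits: base for base, bits in BASE_TO_BITS.items()}


def dna_to_binary(seq: str) -> str:
    """Translate a DNA string into a string of 0s and 1s, two bits per base."""
    return "".join(BASE_TO_BITS[base] for base in seq.upper())


def binary_to_dna(bits: str) -> str:
    """Translate a string of 0s and 1s back into DNA, reading two bits at a time."""
    if len(bits) % 2 != 0:
        raise ValueError("bit string must have an even length")
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


encoded = dna_to_binary("GATTACA")
print(encoded)                 # prints 10001111000100
print(binary_to_dna(encoded))  # prints GATTACA
```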

Although Evo 2 can potentially design code for artificial life, it has not yet designed an entire genome and is not being used to create artificial organisms. Instead, Evo 2 is being used to (1) predict genetic abnormalities and (2) generate genetic code.

Accurate over 90% of the time, Evo 2 can predict which mutations in BRCA1 (a gene central to understanding breast cancer) are benign and which are potentially pathogenic. This is big, since a gene can be hundreds to many thousands of nucleotides long, and a change to even a single nucleotide (termed a Single Nucleotide Variant, or SNV) could have drastic consequences for the structure and function of the resulting protein. Thus, being able to computationally pinpoint dangerous mutations reduces the amount of time and money spent testing each mutation in a lab, and paves the way for developing more targeted drugs.
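
To get a feel for why testing every mutation in a lab is so costly, the sketch below lists every possible SNV of a short, made-up sequence. Each position can change to any of the three other bases, so a sequence of n nucleotides has 3n possible SNVs. The function name and the toy sequence are our own, purely for illustration; this is not how Evo 2 scores variants.

```python
def single_nucleotide_variants(seq):
    """Yield (position, original base, new base, mutated sequence) for every SNV."""
    for position, original in enumerate(seq):
        for new_base in "ACGT":
            if new_base != original:
                mutated = seq[:position] + new_base + seq[position + 1:]
                yield position, original, new_base, mutated


toy_sequence = "ATGGCCATT"  # a made-up 9-nucleotide example, not a real gene
variants = list(single_nucleotide_variants(toy_sequence))
print(len(variants))  # prints 27, i.e. 3 variants for each of the 9 positions
```

A sequence of 1,000 nucleotides would already have 3,000 possible SNVs, which is why a fast computational first pass is attractive.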

Second, Evo 2 can design genetic code for highly specialized and controlled proteins, which opens up many fruitful possibilities for synthetic biology (making synthetic molecules using biological systems), from pharmaceuticals to plastic-degrading enzymes. It can generate entire mitochondrial genomes, minimal bacterial genomes, and entire yeast chromosomes–a feat that had not been achieved before.

A notable complexity of eukaryotic genomes is their many-layered epigenomic regulation: chemical and structural changes around the DNA, some of them shaped by the environment, that control gene expression without altering the sequence itself. Evo 2 works around this by using models of epigenomic structures, made possible through inference-time scaling. Put simply, inference-time scaling is a technique, as NVIDIA describes it, that allows AI models to take extra time to “think” by evaluating multiple candidate solutions before selecting the best one.
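
The “evaluate many candidates, keep the best” idea can be sketched in a few lines. This is a deliberately simplified best-of-N search, not Evo 2's actual procedure: `propose_sequence` is a hypothetical stand-in for the generative model (here it just picks random bases), and `score` is a hypothetical stand-in for an epigenomic predictor (here it simply rewards sequences whose G/C fraction is close to a target).

```python
import random


def propose_sequence(rng, length):
    """Stand-in for a generative model: returns a random DNA string."""
    return "".join(rng.choice("ACGT") for _ in range(length))


def score(seq, target_gc=0.5):
    """Stand-in for a predictor: higher is better, best when GC fraction hits the target."""
    gc_fraction = sum(base in "GC" for base in seq) / len(seq)
    return -abs(gc_fraction - target_gc)


def best_of_n(n, length, seed=0):
    """Generate n candidates and return the one with the highest score."""
    rng = random.Random(seed)
    candidates = [propose_sequence(rng, length) for _ in range(n)]
    return max(candidates, key=score)


one_try = best_of_n(1, 20)
fifty_tries = best_of_n(50, 20)
print(score(one_try), score(fifty_tries))
```

Spending more compute at generation time (a larger `n`) can never make the chosen candidate score worse here, since the first candidate is always among those considered.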

How is Evo 2 so knowledgeable, despite only being one year old? The answer lies in deep learning.

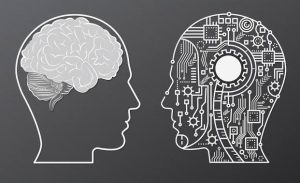

Just as in Large Language Models, or LLMs (think: ChatGPT, Gemini, etc.), Evo 2 decides what genes should look like by “training” on massive amounts of previously known data. Where LLMs train on existing text, Evo 2 trains on the entire genomes of over 130,000 organisms. This training, the processing of massive amounts of data, is central to deep learning. In training, individual pieces of data called tokens are fed into a “neural network,” a fancy name for a collection of software functions that communicate data to one another. As their name suggests, neural networks are loosely modeled after the human nervous system, which is made up of individual neurons that are analogous to software functions. Just like brain cells, “neurons” in the network can both take in information and produce output by communicating with other neurons. Each neural network has multiple layers, each with a certain number of neurons. In the simplest design, each neuron in a layer sends information to every neuron in the next layer, allowing the model to process and distill large amounts of data. In general, the more neurons involved, the more detailed the patterns the network can capture.
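
Here is a minimal sketch of that layered structure, assuming the simplest fully connected design; Evo 2's real architecture is far larger and more elaborate. A single nucleotide token is turned into numbers, then passed through two small layers. All weights below are made-up values chosen only for illustration, and the function names are our own.

```python
import math


def one_hot(base):
    """Turn a nucleotide token into four numbers, with a 1.0 marking which base it is."""
    return [1.0 if base == candidate else 0.0 for candidate in "ACGT"]


def dense_layer(inputs, weights, biases):
    """Each output neuron takes a weighted sum of every input, then squashes it with tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the data through each layer in turn; one layer's output is the next one's input."""
    for weights, biases in layers:
        inputs = dense_layer(inputs, weights, biases)
    return inputs


# Made-up weights: layer 1 maps 4 inputs to 3 neurons, layer 2 maps 3 to 2.
layers = [
    ([[0.5, -0.2, 0.1, 0.0], [0.3, 0.8, -0.5, 0.2], [-0.6, 0.1, 0.4, 0.7]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5], [0.2, 0.4, -0.3]], [0.0, 0.05]),
]
print(forward(one_hot("G"), layers))
```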

This neural network then attempts to solve a problem. Since practice makes perfect, the network attempts the problem over and over; each time, it strengthens the connections that led toward a correct answer while weakening the others. This is called adjusting parameters: the numerical values within a model that dictate how it behaves and what it produces. Each adjustment reduces error and increases accuracy. Evo 2 comes in versions with 7 billion and 40 billion parameters and has a context window of up to 1 million tokens, meaning it can take in a stretch of up to a million nucleotides at once.
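
The loop of “try, measure the error, nudge the parameters” can be shown with a toy model that has just one parameter. The sketch below uses gradient descent, a standard training method, on made-up data where the right answer is to multiply the input by 2. It illustrates the idea only and is not Evo 2's training code.

```python
def train(xs, ys, learning_rate=0.01, steps=200):
    """Fit a single parameter w so that w * x is as close as possible to y."""
    w = 0.0  # start with an uninformed guess
    for _ in range(steps):
        # Average slope of the squared error with respect to w.
        gradient = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        # Nudge w in the direction that reduces the error.
        w -= learning_rate * gradient
    return w


inputs = [1.0, 2.0, 3.0, 4.0]
targets = [2.0, 4.0, 6.0, 8.0]  # made-up data: each target is twice the input
learned_w = train(inputs, targets)
print(round(learned_w, 4))  # prints 2.0
```

A model with billions of parameters repeats the same kind of small adjustment for every parameter at once.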

The idea of anyone being able to create genetic code may spark fear; however, Evo 2 developers have prevented the model from returning productive answers to inquiries about pathogens, and the data set was carefully chosen to exclude pathogens that infect humans and other complex organisms. Furthermore, the positive possibilities of Evo 2 are likely far greater than we currently realize: scientists believe Evo 2 will advance our understanding of biological systems by generalizing across massive amounts of genomic data from known biology. This may reveal higher-level patterns and unearth more biological truths from a bird's-eye view.

It’s important to note that Evo 2 is a foundation model, emphasizing generalist capabilities over task-specific optimization. It was intended to be a foundation for scientists to build upon and alter for their own projects. Because it is open source, anyone can access the model code and training data. Anyone (even you!) can generate their own strings of genetic code with Evo Designer.

Biotechnology is rapidly advancing. For example, DNA origami allows scientists to fold DNA into highly specialized nanostructures of almost any shape–including smiley faces and a map of China–potentially allowing scientists to use DNA code to design biological robots much smaller than any robot we have today. These tiny robots could target highly specific areas of the body, such as receptors on cancer cells. Evo 2, with its design abilities, opens up many possibilities for DNA origami design. From gene therapy, to mutation prediction, to miniature smiley faces, it is clear that computation is becoming increasingly important in understanding the most obscure intricacies of life, and we are just at the start.
