Computer vision (CV) is a field of computer science that allows computers to “see” or, in more technical terms, to recognize, analyze, and respond to visual data such as images and videos. CV is widely used in our daily lives, from tasks as simple as recognizing handwritten text to tasks as complex as analyzing and interpreting MRI scans. With the rapid advances in AI over the last few years, CV has also been improving quickly. However, like any other subfield of AI, CV has its own set of ethical, social, and political implications, especially when it is used to analyze people’s visual data.

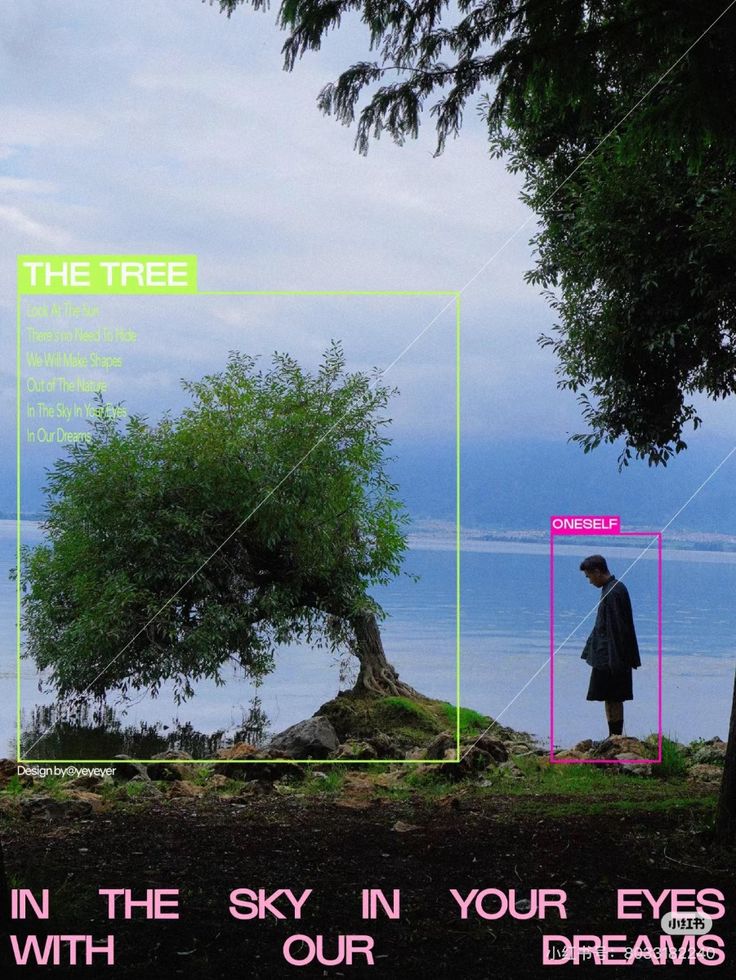

Although CV has been around for some time, there is limited work on its ethical implications within the broader AI ethics literature. One study of the existing literature identified six ethical themes: espionage, identity theft, malicious attacks, copyright infringement, discrimination, and misinformation [1]. As seen in Figure 1, one of the main CV applications is face recognition, which raises its own issues of error, function creep (the expansion of a technology beyond its original purposes), and privacy [2].

To discuss CV’s ethics, the authors of the article take a critical approach, evaluating its implications through the framework of power dynamics. They analyze three types of power: dispositional, episodic, and systemic [3].

Dispositional Power

Dispositional power is defined as the ability to bring about a significant outcome [4]. When people gain this power, they feel empowered to explore new opportunities, and their scope of agency increases (they become more independent in their actions) [5]. However, CV can threaten dispositional power in several ways, ultimately reducing people’s autonomy.

One way CV disempowers people is by limiting their control over information. Because CV works with both pre-existing and real-time camera footage, people are often unaware that they are being recorded and frequently cannot avoid it. As a result, the technology makes it hard for people to control the data gathered about them, and protecting their personal information may require measures as extreme as hiding their faces.

Beyond limiting people’s control over what data is gathered about them, advanced technologies make it extremely difficult for an average person to know what specific information can be extracted from visual data. CV might also undermine people’s ability to follow their own judgment in several ways: by defining who they are on their behalf (automatically inferring their race, gender, and mood), by creating a forced moral environment (where people act out of fear of being watched rather than from their own intentions), and by potentially fostering over-dependence on computers (e.g., relying on facial analysis to interpret emotions).

In all these and other ways, CV undermines the foundation of dispositional power by limiting people’s ability to control their information, make independent decisions, express themselves, and act freely.

Episodic Power

Episodic power, often referred to as power-over, is the direct exercise of power by one individual or group over another. CV can both create new forms of this power and make existing ones more efficient [6]. This isn’t always a bad thing (for example, parents watching over their children), but problems arise when CV makes that control too invasive or one-sided, especially in ways that limit people’s freedom to act independently.

With CV taking security cameras to the next level, opportunities such as baby-room monitoring or fall detection for elderly people open up. However, it also automates surveillance, which can lead to over-enforcement at scales ranging from private individuals to large organizations such as workplaces and insurance companies. Other shifts in power dynamics also need to be considered. For example, smart doorbells can capture far more than the person at the door and may violate neighbors’ privacy, creating peer-to-peer surveillance.

These examples show that while CV may offer convenience or safety, it can also tip power balances in ways that reduce personal freedom and undermine one’s autonomy.

Systemic Power

Systemic power is not viewed as an individual exercise of power. Instead, it is a set of societal norms and practices that affect people’s autonomy by shaping what opportunities they have, what values they hold, and what choices they make. CV can strengthen systemic power by making law enforcement more efficient through smart cameras and by increasing businesses’ profits through business intelligence tools.

However, CV can also reinforce pre-existing systemic injustices in society. One example is flawed facial analysis. Commercial algorithms have been shown to be more accurate for lighter-skinned and male faces [7], and errors of this kind in facial recognition have led to a number of false arrests. Such bias can leave people with unequal opportunities (when biased systems are used in hiring processes) or harm their self-worth (when they are falsely identified as criminals).

Another matter of systemic power is the environmental cost of CV. AI systems rely on vast amounts of data, which require energy-intensive processing and storage. As societies become increasingly dependent on AI technologies like CV, those trying to protect the environment have little ability to resist or reshape these damaging practices. The power lies with tech companies and industries, leaving citizens without the means to challenge the system. When the system becomes this hard to challenge or change, ethical concerns about CV arise.

Conclusion

Computer vision is a powerful tool that keeps evolving each year. We already see numerous applications of it in our daily lives, ranging from self-checkouts in stores and smart doorbells to autonomous vehicles and tumor detection. Despite CV’s potential to improve our lives and make them safer, there are a number of ethical concerns that should be considered. We need to critically examine how CV affects people’s autonomy, how it might create one-sided power dynamics, and how it can reinforce societal prejudices. As we rapidly transition into an AI-driven world, there is more to come in the field of computer vision. However, in the pursuit of innovation, we should ensure that progress does not come at the cost of our ethical values.

References:

[1] Lauronen, M.: Ethical issues in topical computer vision applications. Information Systems, Master’s Thesis. University of Jyväskylä. (2017). https://jyx.jyu.fi/bitstream/handle/123456789/55806/URN%3aNBN%3afi%3ajyu-201711084167.pdf?sequence=1&isAllowed=y

[2] Brey, P.: Ethical aspects of facial recognition systems in public places. J. Inf. Commun. Ethics Soc. 2(2), 97–109 (2004). https://doi.org/10.1108/14779960480000246

[3] Haugaard, M.: Power: a “family resemblance concept.” Eur. J. Cult. Stud. 13(4), 419–438 (2010)

[4] Morriss, P.: Power: a philosophical analysis. Manchester University Press, Manchester, New York (2002)

[5] Morriss, P.: Power: a philosophical analysis. Manchester University Press, Manchester, New York (2002)

[6] Brey, P.: Ethical aspects of facial recognition systems in public places. J. Inf. Commun. Ethics Soc. 2(2), 97–109 (2004). https://doi.org/10.1108/14779960480000246

[7] Buolamwini, J., Gebru, T.: Gender shades: intersectional accuracy disparities in commercial gender classification. Conference on Fairness, Accountability, and Transparency, pp. 77–91 (2018)

Coeckelbergh, M.: AI ethics. MIT Press (2020)